Table of Contents

- Introduction

- Specification

- Compatibility

- Toolchain Support

- Profiling

- Webpack TLA Runtime

- Can We Use TLA Now?

- Summary

- Next Steps

- In Conclusion

- Further Updates

- Refs

Introduction

Inside ByteDance, users of the Mobile Web Framework we built on top of Rsbuild have run into errors reported by the Syntax Checker:

When we see problems like this, our first suspect is usually a third-party dependency: for performance reasons, the builder does not compile *.js|ts files under node_modules by default[1]. If a user depends on a package that ships async/await, the final output fails the syntax check. Our usual advice is therefore to use source.include to downgrade (transpile) those third-party dependencies:

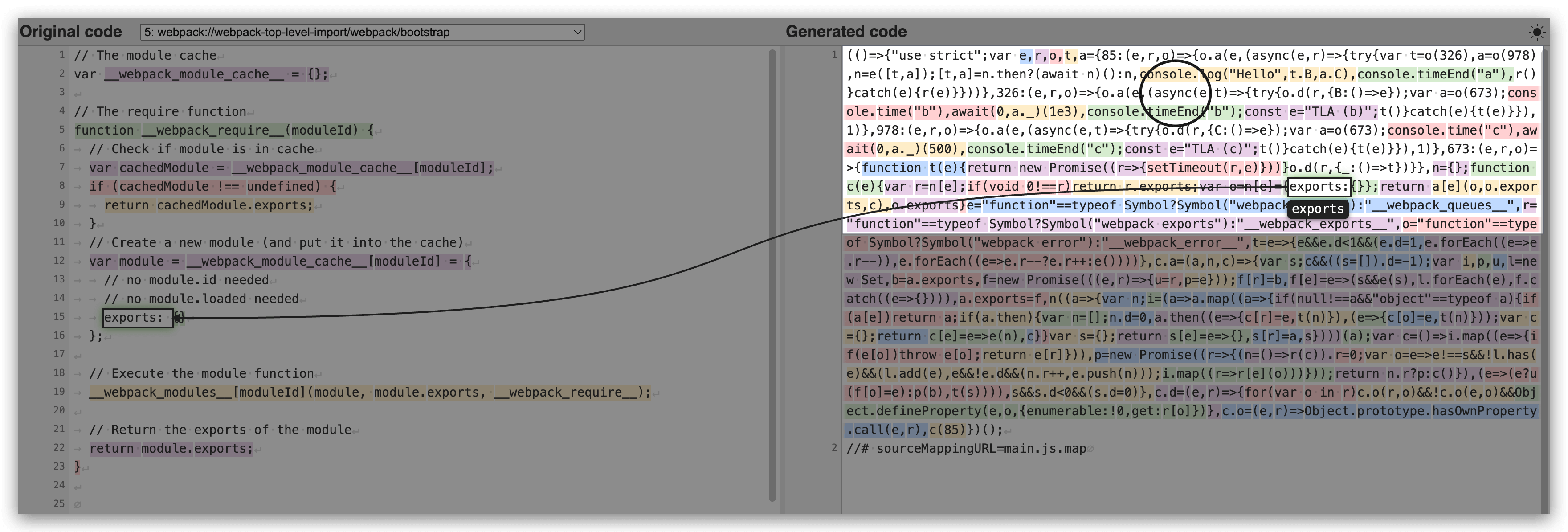

Interestingly, the cause turned out to be something else. When we used Source Map Visualization to locate the issue, we found that the async keyword sat in a white, unmapped region, meaning no source code mapped to it:

On further analysis, we found that this async came from the runtime that Webpack injects when compiling TLA (Top-level await). This led us to investigate TLA more closely.

In this article, we take an in-depth look at TLA's specification, toolchain support, profiling, the Webpack runtime, and whether it can be used today.

Specification

The latest definition of TLA can be found in the ECMAScript proposal: Top-level await. TLA was motivated by the fact that await is only available inside an async function, which leads to the following problems:

1. If a module uses an IIAFE (Immediately Invoked Async Function Expression), its exports may be accessed before the IIAFE has finished initializing them, as shown below:

2. To solve problem 1, we might export a Promise for consumers, but exporting a Promise obviously requires every consumer to be aware of it:

Consumers then have to use the module like this:

This raises the following issues[2]:

- Every dependent must understand this module's protocol to use it correctly;

- If you forget the protocol, the code may sometimes work (because it happens to win the race) and sometimes not;

- With multiple layers of dependencies, the Promise has to be threaded through every module ("Chain pollution?").

Top-level await was introduced to address this. With it, the module can be written as:

A typical use case is "dynamic dependency pathing", which is very useful for scenarios such as internationalization and environment-based dependency splitting:
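As a rough sketch of dynamic dependency pathing, the snippet below chooses a module by locale at runtime and loads it with dynamic import(). It assumes Node.js, uses data: URLs as stand-ins for hypothetical per-locale files such as ./i18n/en.js, and wraps the await in a function so it also runs as a CommonJS script; in a real ES module the await would sit at the top level.

```javascript
// Turn a module source string into an importable data: URL (stand-in for a file).
const toModuleUrl = (source) =>
  'data:text/javascript,' + encodeURIComponent(source);

// Hypothetical per-locale modules.
const localeModules = {
  en: toModuleUrl('export default { greeting: "Hello" };'),
  fr: toModuleUrl('export default { greeting: "Bonjour" };'),
};

// In an ES module this body could simply be:
//   const strings = (await import(url)).default;
async function loadStrings(locale) {
  // Fall back to English when the requested locale is not available.
  const url = localeModules[locale] ?? localeModules.en;
  const { default: strings } = await import(url);
  return strings;
}
```

Only the module for the selected locale is ever loaded, which is what makes this pattern useful for splitting dependencies by language or environment.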

More use cases can be found here.

Compatibility

According to Can I Use, TLA is available in Chrome 89 and Safari 15, and Node.js has officially supported TLA since v14.8.0:

You can copy this code into the Chrome DevTools Console or the Node.js command line to run it:

That is TLA running natively. However, since it is a fairly new ECMAScript feature, it is still difficult (as of 2023) to use directly in mobile UI code; for now, UI code that uses it has to go through a compilation tool. The next section covers the compilation behavior of common toolchains and the compatibility of their output.

Toolchain Support

Prerequisites

To keep the comparison of compilation behavior consistent, we use the following minimal example throughout:


Minimal repositories for each tool are available: TypeScript (tsc) | esbuild | Rollup | Webpack. There is no repository for bun, because bun needs no configuration: you can test its bundling in any repository by running bun build src/a.ts --outdir ./build --format esm.

TypeScript (tsc)

tsc can only compile TLA when module is es2022, esnext, system, node16 or nodenext, and target is es2017 or higher; otherwise it reports the following error:

After successful compilation, you can see that the output and the source code are almost identical:

Since tsc is a transpiler and does not bundle, it introduces no additional runtime for TLA. In other words, tsc does not address TLA compatibility at all. See the Profiling section for how to run this output.

esbuild

esbuild can currently compile TLA only when format is esm and target is es2022 or higher (this corresponds to tsc's module option rather than its target). In other words, esbuild only makes sure TLA compiles; it is not responsible for TLA compatibility:


After successful compilation, the output is as follows:

As we can see, the output simply tiles all the modules into one flat scope, which appears to change the original semantics of the code! The Profiling section confirms this.
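To illustrate why tiling changes semantics, here is a hand-written approximation (not actual esbuild, Rollup or bun output) of what happens when the bodies of b, c and a end up in one scope. The sleep durations are shortened to hypothetical 400ms and 200ms values, and the tiled code is wrapped in an async function so it runs as an ordinary script.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hand-written approximation of a "tiled" bundle (not real bundler output).
async function tiledBundle(order) {
  // --- body of b.js (hypothetical 400ms instead of 1000ms) ---
  order.push('b:start');
  await sleep(400);
  order.push('b:end');
  // --- body of c.js (hypothetical 200ms instead of 500ms) ---
  order.push('c:start');
  await sleep(200);
  order.push('c:end');
  // --- body of a.js: runs last, as in the original module graph ---
  order.push('a:run');
}

async function measure() {
  const order = [];
  const start = Date.now();
  await tiledBundle(order);
  return { elapsed: Date.now() - start, order };
}
```

Because c's body now sits after b's top-level await in the same scope, c cannot start until b finishes, so the total is roughly the sum of both delays rather than the longer one.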

Regarding TLA support in esbuild, the response from esbuild author @evanw is[3]:

Sorry, top-level await is not supported. It messes with a lot of things and adding support for it is quite complicated. It likely won't be supported for a long time.

Rollup

Rollup can only compile TLA when format is es or system; otherwise it reports the following error:

With es, Rollup changes the semantics in the same way esbuild does when generating an es bundle, so we won't go over it again. For system, the SystemJS documentation shows that SystemJS supports defining a module as an Async Module:

execute: AsyncFunction - If using an asynchronous function for execute, top-level await execution support semantics are provided following variant B of the specification.

So Rollup does nothing special here: it just wraps the TLA inside the execute function, and Rollup itself adds no runtime-level handling for TLA. For TLA support with iife output, see Rollup issue[4] at https://github.com/rollup/rollup/issues/3623.

Webpack

Webpack first supported TLA in Webpack 5, but it had to be enabled via experiments.topLevelAwait in the Webpack configuration:
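A minimal configuration sketch, assuming a CommonJS webpack.config.js and a placeholder entry path (./src/index.js is hypothetical, use your own):

```javascript
// webpack.config.js: enable TLA on Webpack 5 versions before 5.83.0.
const config = {
  mode: 'production',
  entry: './src/index.js', // placeholder entry for this sketch
  experiments: {
    // Allows top-level await in ES modules (default: true since 5.83.0).
    topLevelAwait: true,
  },
};

module.exports = config;
```

On 5.83.0 and later the flag is already on, so setting it explicitly is redundant but harmless.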

Starting with 5.83.0, Webpack enables this option by default. However, if you simply write a piece of TLA test code and compile it with Webpack:

You will run into the following compilation error:

The related issue (webpack/#15869 · Top Level await parsing failes) explains that, by default, Webpack treats modules without import / export as CommonJS modules. This logic is implemented in HarmonyDetectionParserPlugin.js:

In summary, the conditions for successful TLA compilation in Webpack are as follows:

- Ensure experiments.topLevelAwait is set to true;

- Make sure the module using TLA has an export statement so that it is recognized as an ES Module (HarmonyModules).

For more on how Webpack handles TLA's runtime process, refer to the Webpack TLA Runtime section.

bun

bun build currently only supports esm output, which means bun also emits TLA into the output as is. It does not address compatibility and only targets modern browsers.

Profiling

This section first explains how to run the output of each toolchain, then examines its execution behavior with profiling.

In Node.js

First, a module that uses TLA must be an ES module, and running ES modules in Node.js comes with a number of pitfalls. Since tsc produces multiple ES modules rather than a single bundle, its output is the most complex case, so this section uses Node.js to run the tsc output.

Question: .mjs or type: module?

If you run the tsc output directly with node esm/a.js, you'll hit the following problem:

According to https://nodejs.org/api/esm.html#enabling:

Node.js has two module systems: CommonJS modules and ECMAScript modules. Authors can tell Node.js to use the ECMAScript modules loader via the .mjs file extension, the package.json "type" field, or the --input-type flag. Outside of those cases, Node.js will use the CommonJS module loader.

Here, instead of renaming the output to .mjs, we add "type": "module" to package.json:

Question: Missing .js extension in tsc output

After solving the previous issue, we encountered the following problem:

According to https://nodejs.org/api/esm.html#import-specifiers:

Relative specifiers like './startup.js' or '../config.mjs'. They refer to a path relative to the location of the importing file. The file extension is always necessary for these.

That is, when loading ES modules in Node.js, the extension is required, but tsc output does not include the .js extension by default. Following the TypeScript documentation and related guides[5], we made these changes:

- Change compilerOptions.module to NodeNext (a long story of its own, which we won't go into here);

- Change every import "./foo" to import "./foo.js".

There's another solution for the .js extension issue: running node with --experimental-specifier-resolution=node. But this flag has been removed from the documentation in the latest Node.js 20, so its use is not recommended.

With these changes, the code runs successfully. The final fix commit can be found here.

Performance

The output when running time node esm/a.js is as follows:

As you can see, the whole program took only 1.047s, which means b.js (sleep 1000ms) and c.js (sleep 500ms) ran concurrently.
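To reproduce this concurrency without separate files, the sketch below inlines a, b and c as data: URL modules and imports a dynamically. It assumes a recent Node.js release (the ES module loader accepts absolute data: specifiers, so a can import b and c by URL), records evaluation order in a hypothetical globalThis.order array, and shortens the sleeps to 400ms and 200ms.

```javascript
const toModuleUrl = (source) =>
  'data:text/javascript,' + encodeURIComponent(source);

// A module that records when it starts, sleeps via top-level await, then exports.
const sleepModule = (name, ms) =>
  toModuleUrl(`
    globalThis.order.push('${name}:start');
    await new Promise((resolve) => setTimeout(resolve, ${ms}));
    globalThis.order.push('${name}:end');
    export const ${name} = '${name}';
  `);

const b = sleepModule('b', 400);
const c = sleepModule('c', 200);
// a.js imports b and c, just like the minimal example.
const a = toModuleUrl(`
  import { b } from ${JSON.stringify(b)};
  import { c } from ${JSON.stringify(c)};
  globalThis.order.push('a:run ' + b + c);
`);

async function run() {
  globalThis.order = [];
  const start = Date.now();
  await import(a); // a waits for b and c, which evaluate concurrently
  return { elapsed: Date.now() - start, order: globalThis.order };
}
```

The recorded order shows c starting while b is still awaiting, and the elapsed time stays close to the longer delay rather than the sum.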

In Chrome

Chrome has supported TLA since version 89, and you can run a quick TLA snippet as shown at the beginning of this article. However, to test the native behavior of the inter-module imports in our example, we run the tsc output from Toolchain Support > TypeScript (tsc) in the browser. First, create an .html file:

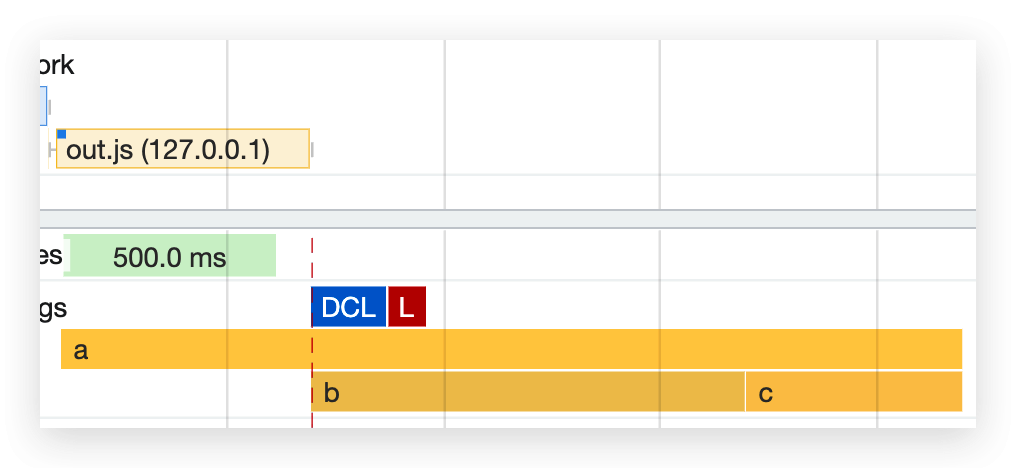

To observe the runtime behavior more easily, we added console.time markers to the code; the runtime sequence is as follows:

As you can see, the load and execution of b.js and c.js are concurrent!

Result

Ignoring resource loading time, running b.js (sleep 1000ms) and c.js (sleep 500ms) serially takes 1.5s, while running them concurrently takes 1s. Using the same approach, we tested the output of several toolchains; the results are as follows:

| Toolchain | Environment | Timing | Summary |
|---|---|---|---|
| tsc | Node.js | node esm/a.js 0.03s user 0.01s system 4% cpu 1.047 total | Execution of b and c is concurrent |
| tsc | Chrome |  | Execution of b and c is concurrent |
| es bundle | Node.js | node out.js 0.03s user 0.01s system 2% cpu 1.546 total | Execution of b and c is synchronous |
| es bundle | Chrome |  | Execution of b and c is synchronous |
| Webpack (iife) | Node.js | node dist/main.js 0.03s user 0.01s system 3% cpu 1.034 total | Execution of b and c is concurrent |
| Webpack (iife) | Chrome |  | Execution of b and c is concurrent |

To sum up, although Rollup, esbuild and bun can compile modules containing TLA into an es bundle, the result does not preserve the semantics of the TLA specification: their straightforward bundling turns modules that should execute concurrently into modules that execute serially. Only Webpack emulates TLA semantics, by compiling to iife and adding the fairly complex Webpack TLA Runtime, which makes Webpack appear to be the only bundler that correctly emulates TLA semantics.

TLA Fuzzer

In the previous section, we checked each toolchain's support for TLA semantics in a rather basic way. In fact, @evanw, the author of esbuild, created a repository, tla-fuzzer, to test the correctness of TLA semantics across bundlers, which further validates our conclusion:

Fuzzing is done by randomly generating module graphs and comparing the evaluation order of the bundled code with V8's[6] native module evaluation order.[7]

Webpack TLA Runtime

Since only Webpack bundles TLA with correct semantics, this section analyzes Webpack's TLA Runtime.

Basic Example

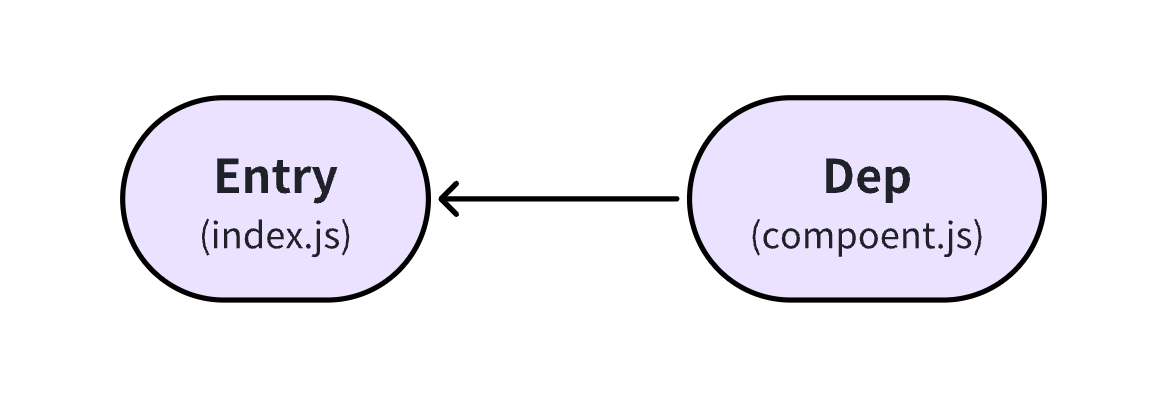

First, recall that for an Entry without any dependencies, Webpack's output is quite simple:

Input

Output

When we use Top-level await:

Input:

Output

Because the output is long, it has been moved out of this article; see TLA Output. As you can see, using TLA makes the build output considerably more complex; we analyze it below.

We can boldly guess that Webpack's output acts like a bundler-level polyfill for the native JS runtime.

Overall Process

Overall, the process starts from Entry: the Entry module is executed through __webpack_require__(), and its dependencies are loaded through __webpack_handle_async_dependencies__(). Dependencies are loaded exactly the same way as Entry; if a dependency has its own dependencies, those are loaded first. Once the dependencies are loaded and their exports obtained, the current module can execute. After it executes, __webpack_async_result__() is called as a callback, allowing its dependents to continue executing.

In essence, the runtime follows the dependency graph exactly, and the initial loading of dependencies is synchronous. When a leaf dependency finishes loading, it returns its exports to its dependents; only then can those dependents start executing and pass their own exports further up. Finally, once all of Entry's dependencies are loaded, Entry's own code begins to execute:

As you can see, without TLA the process is quite simple, just a synchronous DFS. However, once loading a Dep becomes asynchronous, it turns into an asynchronous DFS involving complex asynchronous task handling. Next, we describe in detail how the Webpack TLA Runtime works.

Basic Concepts

Prerequisites

To explain how the Webpack TLA Runtime runs, we created a smaller example for analysis:

Let's clarify some basic concepts and give aliases to the modules in this example:

| File | Uses TLA? | Alias | Notes |
|---|---|---|---|
| index.js | No | Entry | index.js is a Dependent of component.js; component.js is a Dependency of index.js |
| component.js | Yes | Dep | |

The Compilation Process of Webpack

To better understand how TLA works internally, we also need a brief look at the main flow of a single Webpack compilation:

- newCompilationParams: creates the parameters for the Compilation instance; its core job is to initialize the ModuleFactory factory methods used to create module instances later in the build;

- newCompilation: actually creates the Compilation instance and attaches some compilation file information;

- compiler.hooks.make: runs the actual module compilation (Make), which builds entries and modules, runs loaders, parses dependencies, builds recursively, and so on;

- compilation.finish: the final stage of module building, mainly further organizing inter-module dependencies and dependency metadata in preparation for code stitching;

- compilation.seal: the module freezing stage (Seal), which starts stitching modules into chunk and chunkGroup and generates the output code.

Webpack Runtime Globals

During the Seal phase, the final code is generated by concatenating templates according to each Chunk's runtimeRequirements. These templates rely on global variables defined in Webpack's lib/RuntimeGlobals.js:

Artifact Analysis

Next, let's start analyzing the previously generated artifact.

Loading Entry

Firstly, the executed entry point is as follows:

__webpack_require__ is defined as follows:

We can see that:

- __webpack_require__ is a completely synchronous process;

- Async Dependencies are loaded during the execution phase of module loading.

Execution of Entry

As you can see:

- Since Entry depends on a Dep that uses TLA, Entry is also defined as an asynchronous module; __webpack_require__.a is what defines asynchronous modules.

- TLA is contagious: modules that depend on a TLA module are treated as Async Modules, even if they contain no TLA themselves.

Therefore, the core runtime functions are:

- __webpack_require__.a: defines an Async Module;

- __webpack_handle_async_dependencies__: loads asynchronous dependencies;

- __webpack_async_result__: callback invoked when an Async Module finishes loading.

Among these, __webpack_require__.a deserves special mention.

__webpack_require__.a

__webpack_require__.a is used to define an Async Module. The related code analysis is as follows:

When __webpack_require__.a is executed, the following variables are defined:

| Variable | Type | Description |
|---|---|---|
| queue | array | When the current module contains an await, queue is initialized to [d: -1]. Therefore, in this example, Dep has a queue and Entry does not. For details about the queue state machine, see queue. |
| depQueues | Set | Stores the queues of the Dependencies. |
| promise | Promise | Controls the asynchronous loading of the module and is assigned to module.exports. It hands its resolve/reject out so that outside code decides when module loading ends. Once the promise resolves, the upper-layer module can obtain the current module's exports. For details, see promise. |

After these basic definitions, it starts executing the module body (body()), passing it two core methods:

- __webpack_handle_async_dependencies__

- __webpack_async_result__

Note that execution inside the body function is asynchronous. After body() has been called, if queue exists (i.e., in a TLA module) and queue.d < 0, queue.d is set to 0.

queue

This is a state machine:

- When a TLA module is defined, queue.d is set to -1.

- After the body of the TLA module has been invoked, queue.d is set to 0.

- When the TLA module has completely finished loading, the resolveQueue method sets queue.d to 1.

promise

The promise above also carries two additional properties worth mentioning:

| Property | Description |
|---|---|
| [webpackExports] | Indirectly references module.exports, so Entry can get Dep's exports through the promise. |
| [webpackQueues] | 1. Entry and Dep hold each other's states; 2. When Entry loads its dependency ([Dep]), it passes a resolve function to Dep. When Dep has completely finished loading, it calls Entry's resolve function, passing Dep's exports to Entry. Only then can Entry's body begin executing. |

resolveQueue

resolveQueue is one of the highlights of this runtime. After a module's body has executed, resolveQueue is called; its implementation is as follows:
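The counting idea behind resolveQueue can be sketched as follows. This is a simplified illustration, not Webpack's implementation: resolveQueue here only mirrors the d state and keeps a per-dependent pending counter, and waitForQueues and makeQueue are hypothetical helpers.

```javascript
// Queue states used here: d = 0 body started, d = 1 fully loaded.
function resolveQueue(queue) {
  // Resolve each queue only once.
  if (!queue || queue.d >= 1) return;
  queue.d = 1;
  for (const waiter of queue) {
    waiter.pending -= 1;
    // The last dependency to finish triggers the dependent's continuation.
    if (waiter.pending === 0) waiter();
  }
}

// Hypothetical helper: register onReady with every dependency queue that has
// not fully loaded yet, counting how many are still pending.
function waitForQueues(queues, onReady) {
  const waiter = () => onReady();
  waiter.pending = 0;
  for (const queue of queues) {
    if (queue.d < 1) {
      waiter.pending += 1;
      queue.push(waiter);
    }
  }
  if (waiter.pending === 0) onReady(); // everything was already loaded
}

// Hypothetical helper: a queue for a module whose body has started.
function makeQueue() {
  const queue = [];
  queue.d = 0;
  return queue;
}

const depB = makeQueue();
const depC = makeQueue();
const log = [];
waitForQueues([depB, depC], () => log.push('entry body runs'));
resolveQueue(depC);
log.push('C resolved');
resolveQueue(depB);
log.push('B resolved');
resolveQueue(depB); // no effect: B is already resolved
```

The dependent's continuation runs exactly once, when the last of its pending dependencies resolves, regardless of the order in which they finish.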

Complex Example

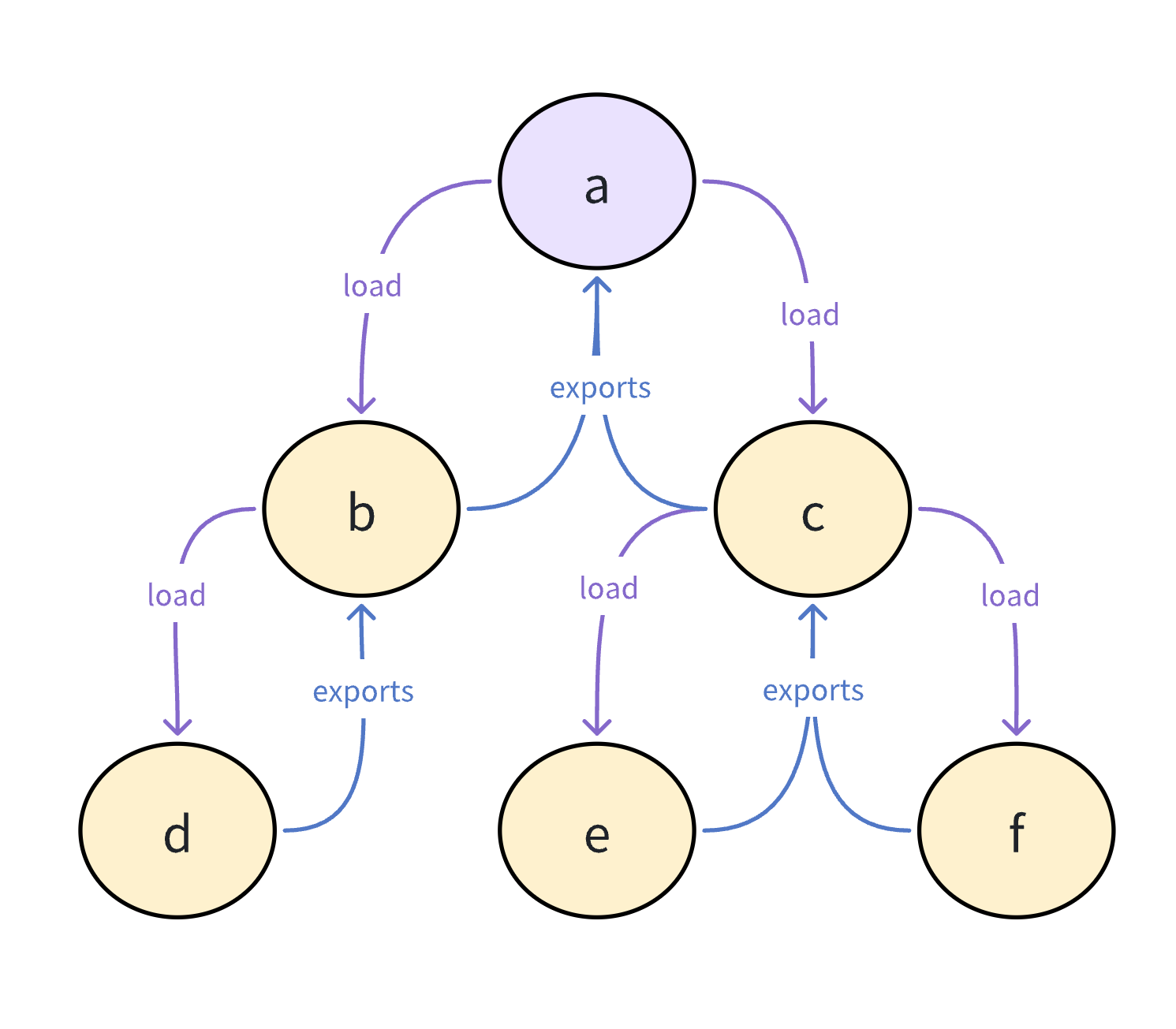

Given the dependency graph in the figure on the left, where modules d and b use TLA (Top-level await):

- a and c also become Async Modules, because TLA propagates to dependents.

- When a module starts loading: that is, when __webpack_require__ is called. A DFS (depth-first search) is performed following import order. Suppose the imports in a are as follows:
  Then the loading order is a -> b -> e -> c -> d.

- When a module finishes loading:
  - If d takes longer to load than b, modules finish loading in the order b -> d -> c -> a.
  - If d takes less time to load than b, modules finish loading in the order d -> c -> b -> a.
  - The sync module e is not considered here, because it has already finished loading by the time it is loaded.

- In a module graph with TLA, Entry will always be an Async Module.
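The loading and finishing orders above can be checked with a small simulation. It is a sketch of the ordering semantics only, not Webpack's runtime: the graph is hard-coded (and acyclic, which the simple memoization relies on), and the TLA delays are hypothetical values.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// a imports b then c, b imports e, c imports d; b and d contain TLA.
function simulate(delays) {
  const graph = { a: ['b', 'c'], b: ['e'], c: ['d'], d: [], e: [] };
  const loading = new Map(); // module id -> promise of "finished loading"
  const startOrder = [];
  const finishOrder = [];

  function load(id) {
    if (loading.has(id)) return loading.get(id);
    startOrder.push(id);
    // DFS: start loading dependencies in import order before this module runs.
    const deps = graph[id].map(load);
    const done = Promise.all(deps).then(async () => {
      if (delays[id]) await sleep(delays[id]); // this module's own TLA
      finishOrder.push(id);
    });
    loading.set(id, done);
    return done;
  }

  return load('a').then(() => ({ startOrder, finishOrder }));
}
```

In both runs e, the sync module, finishes first; with a slow d, c finishes after b, and with a slow b, c no longer waits for its sibling b before finishing.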

Source of Complexity

The ECMAScript proposal: Top-level await contains a simpler example that describes this behavior:

This is roughly equivalent to:

This example has inspired some bundleless toolchains, such as vite-plugin-top-level-await. The complexity of compiling TLA to iife at the bundler level mainly comes from having to merge all modules into one file while preserving these semantics.
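The idea behind such bundleless tooling can be sketched by hand. In this illustrative desugaring, the ready property and the module objects are hypothetical and not taken from vite-plugin-top-level-await: each module body becomes an async function whose promise is exported, and each importer awaits the promises of its dependencies before running its own body.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const log = [];

// b.js:  await sleep(100); export const b = 'b';
const modB = { b: undefined };
modB.ready = (async () => {
  await sleep(100);
  modB.b = 'b';
  log.push('b done');
})();

// c.js:  await sleep(50); export const c = 'c';
const modC = { c: undefined };
modC.ready = (async () => {
  await sleep(50);
  modC.c = 'c';
  log.push('c done');
})();

// a.js:  import { b } from './b.js'; import { c } from './c.js';
const modA = { result: undefined };
modA.ready = (async () => {
  // b and c were both started above, so siblings run concurrently;
  // a's own body waits until both have finished.
  await Promise.all([modB.ready, modC.ready]);
  modA.result = modB.b + modC.c;
  log.push('a done');
})();
```

Doing this per file is straightforward; a bundler targeting iife has to reproduce the same waiting structure after all files have been merged into one.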

Can We Use TLA Now?

The runtime described above is injected as inline script during the Seal phase. Since Seal is the final stage of compilation and does not go through the Make phase again (loaders are not run), the concatenated template code must take compatibility into account itself. And indeed, Webpack's internal templates do consider compatibility, for instance:

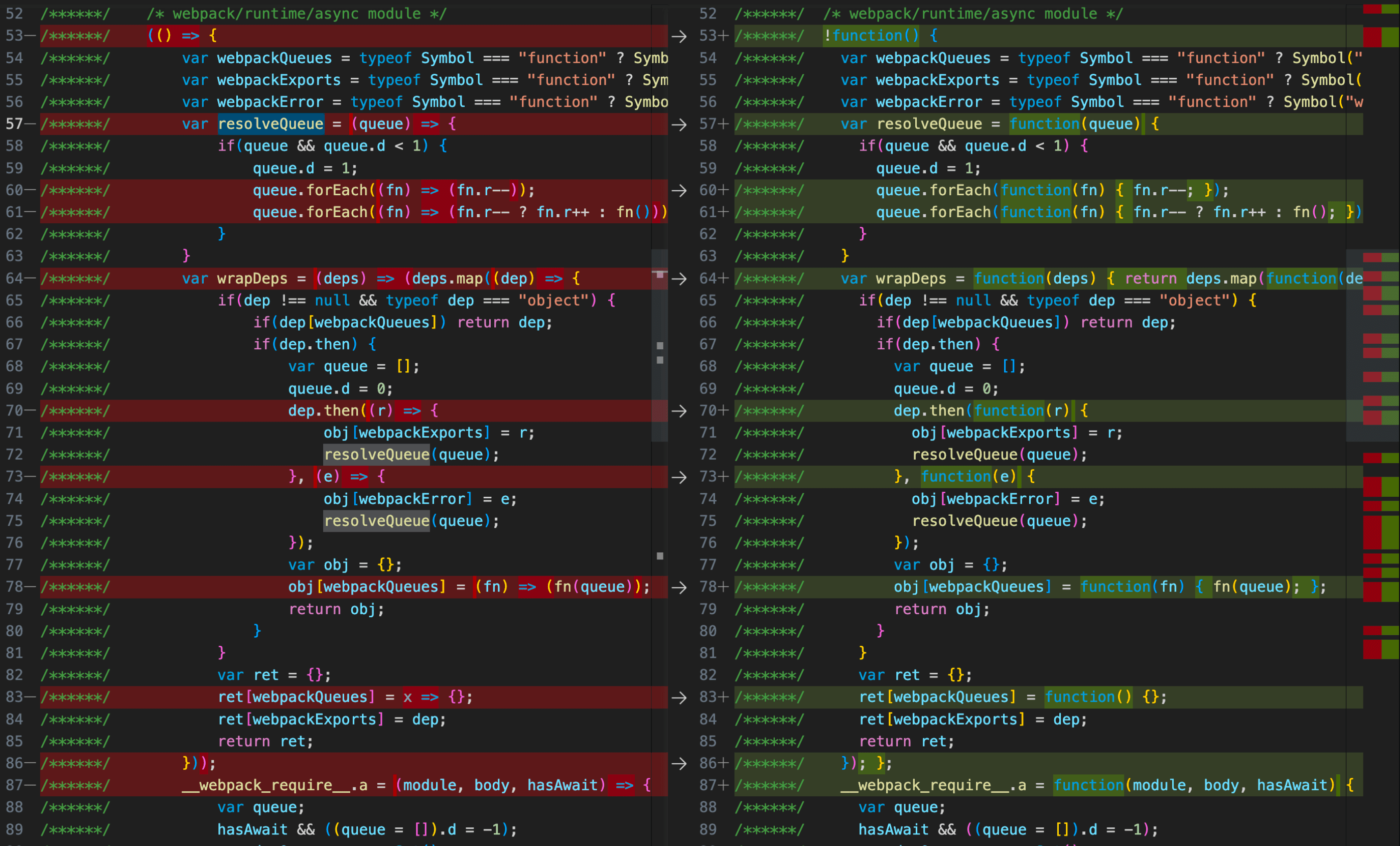

When we switch target between es5 and es6, there is a noticeable difference in the output:

Left: target: ['web', 'es6']; right: target: ['web', 'es5']

However, Top-level await does not follow this principle. In webpack#12529, we can see that Alexander Akait once questioned the compatibility of async/await in Template, but Tobias Koppers responded that it was very difficult to fix:

As a result, the implementation has remained as is in Webpack, and TLA is one of the few features whose runtime template causes compatibility issues in Webpack.

In other words, if the template depends on async/await, making it compatible would require either introducing regenerator-runtime or a more elegant state-machine-based implementation like the one in tsc (see TypeScript#1664). A former Web Infra intern has also attempted such an implementation (see babel-plugin-lite-regenerator).
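To show what a state-machine-based lowering means, here is a tiny async function rewritten by hand into a switch-driven state machine that relies only on Promise (available in ES2015, or via a polyfill on ES5 engines). It illustrates the technique; it is not the output of tsc or regenerator-runtime, and loadBoth is a hypothetical name.

```javascript
// Original (ES2017):
//   async function loadBoth(load) {
//     const first = await load('b');
//     const second = await load('c');
//     return first + second;
//   }
function loadBoth(load) {
  var state = 0; // which await we are resuming from
  var first;
  return new Promise(function (resolve, reject) {
    function step(value) {
      try {
        switch (state) {
          case 0: // before the first await
            state = 1;
            return Promise.resolve(load('b')).then(step, reject);
          case 1: // resumed after the first await
            first = value;
            state = 2;
            return Promise.resolve(load('c')).then(step, reject);
          case 2: // resumed after the second await
            resolve(first + value);
            return;
        }
      } catch (err) {
        reject(err);
      }
    }
    step();
  });
}
```

Each await becomes a state boundary, and the locals that live across awaits (first) are hoisted out of the step function, which is the same idea tsc's generator-based helpers apply systematically.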

That is, because Webpack's TLA output still contains async/await, it only runs on iOS 11 / Chrome 55 and above:

| Scenario | Minimum versions |
|---|---|
| Top-level await's compatibility | Chrome 89, Safari 15 |
| Expected compatibility (compiled to ES5) | Chrome 23, Safari 6 |
| Actual compatibility (i.e. async / await) | Chrome 55, Safari 11 |

Summary

- TLA was introduced to solve the asynchronous initialization problem of ES modules;

- TLA is an es2022 feature and can be used in Node.js v14.8.0 and above. To use it in UI code, you need a bundler; unless you ship ES modules directly in your frontend project, you generally need to bundle into iife;

- Most bundlers can compile TLA when the output format is esm, but only Webpack supports compiling TLA into iife, and Webpack is also the only bundler that correctly emulates TLA semantics;

- Although Webpack can bundle TLA into iife, the output still contains async/await (though not TLA), so it only runs on iOS 11 / Chrome 55 and above. Some large companies' consumer-facing (C-end) mobile web businesses still require compatibility down to iOS 9 / Android 4.4, so for stability you should not use TLA in such projects for now; in the future, adopt TLA based on business needs;

- In Webpack's implementation, just as await must be used inside an async function, TLA causes its dependents to be treated as Async Modules too, but this is transparent to developers.

Next Steps

Having read this far, there are still some additional questions worth further research:

- How does a JS runtime or JS virtual machine implement TLA?

- When TLA is supported natively by the JS runtime or virtual machine, what happens when an Async Module fails to load, and how do you debug it?

In Conclusion

Rich Harris, the author of Rollup, once wrote in a Gist titled "Top-level await is a footgun 👣🔫"[8]:

At first, my reaction was that it's such a self-evidently bad idea that I must have just misunderstood something. But I'm no longer sure that's the case, so I'm sticking my oar in: Top-level await, as far as I can tell, is a mistake and it should not become part of the language.

However, he later mentioned:

TC39 is currently moving forward with a slightly different version of TLA, referred to as 'variant B', in which a module with TLA doesn't block sibling execution. This vastly reduces the danger of parallelizable work happening in serial and thereby delaying startup, which was the concern that motivated me to write this gist.

Therefore, he began to fully support this proposal:

Therefore, a version of TLA that solves the original issue is a valuable addition to the language, and I'm in full support of the current proposal, which you can read here.

The ECMAScript proposal: Top-level await also records the history of TLA:

- In January 2014, the async / await proposal was submitted to the committee;

- In April 2014, the committee discussed reserving the keyword await in modules for TLA;

- In July 2015, the async / await proposal advanced to Stage 2, and it was decided to postpone TLA to avoid blocking that proposal; many committee members had already started discussing it, mainly to make sure it would remain possible in the language;

- In May 2018, the TLA proposal reached Stage 2 of the TC39 process, and many design decisions (especially whether to block "sibling" execution) were discussed during this stage.

How do you see the future of TLA?

Further Updates

Rspack officially supports TLA as of v0.3.8, verified with TLA Fuzzer.

Rspack is a high-performance JavaScript bundler written in Rust, with strong interoperability with the webpack ecosystem. Rspack recently added TLA (Top-level await) support in v0.3.8.

It's worth mentioning that Rspack achieved consistent results with Webpack in TLA Fuzzer testing[9]:

With this in mind, we can add Rspack to the list of Bundlers that can correctly simulate TLA semantics!

Refs

[1]: https://rsbuild.dev/config/options/source.html#sourceinclude

[2]: https://github.com/tc39/proposal-top-level-await

[3]: https://github.com/evanw/esbuild/issues/253

[4]: https://github.com/rollup/rollup/issues/3623

[5]: https://www.typescriptlang.org/docs/handbook/esm-node.html

[6]: https://v8.dev/features/top-level-await

[7]: https://github.com/evanw/tla-fuzzer

[8]: https://gist.github.com/Rich-Harris/0b6f317657f5167663b493c722647221

[9]: https://github.com/ulivz/tla-fuzzer/tree/feat/rspack